WE NEED TO TALK ABOUT KELP (AND CUSTOM BATCHING)

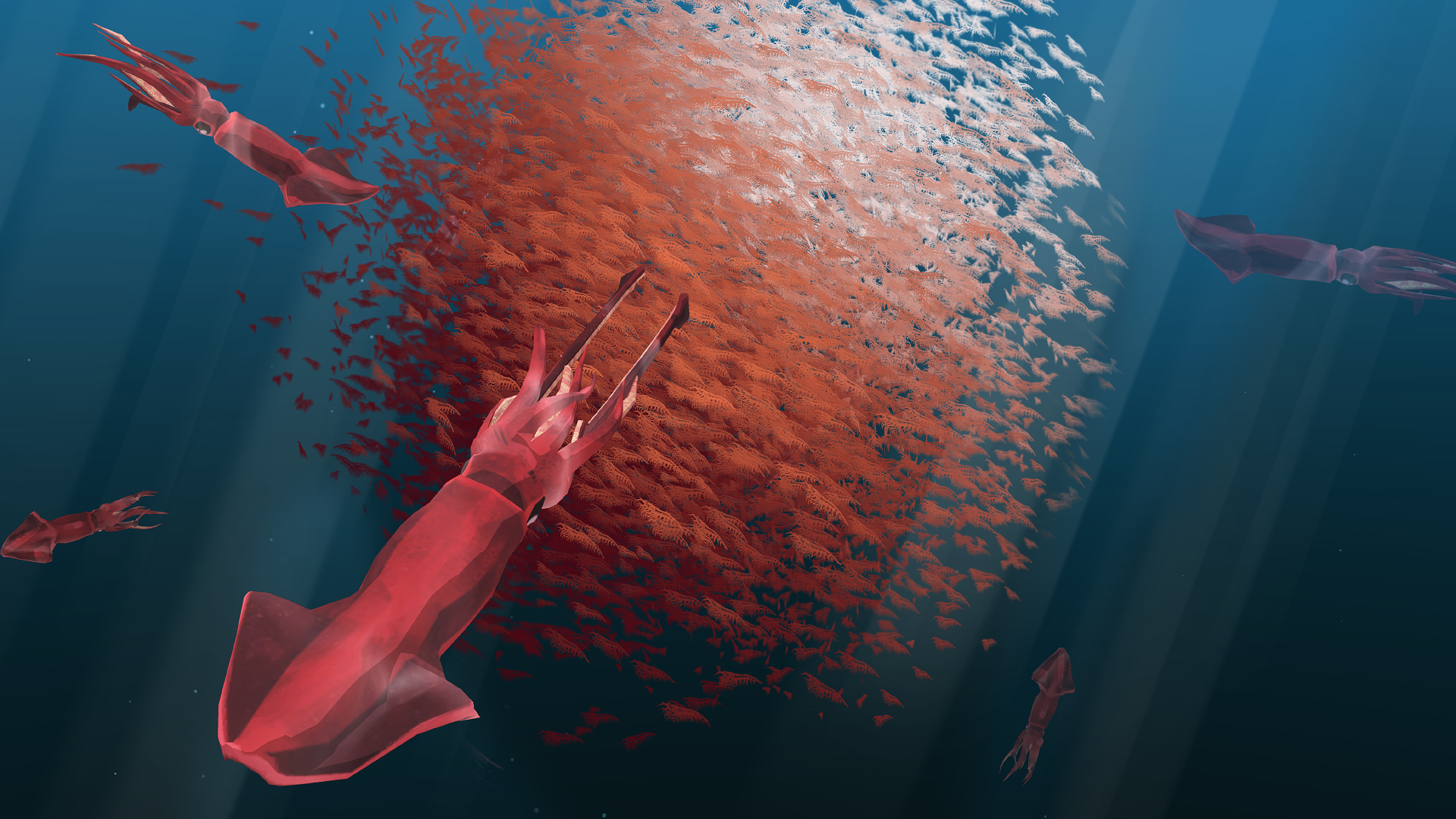

If I were to say ‘kelp’ in the studio, you would probably see a sway of people pinching their nose bridges or reaching for bottles of whiskey. Early in development, we discovered that rendering the quantity of kelp branches needed for a rich underwater environment just wasn’t going to be possible, even with billboarding techniques. Some of our first attempts ran at 20fps (frames per second). In essence, using transparent textures broke batching, which hurt performance. The obvious solution, using 20 branches of kelp rather than the 600+ branches we ended up with, wasn’t going to cut the mustard!

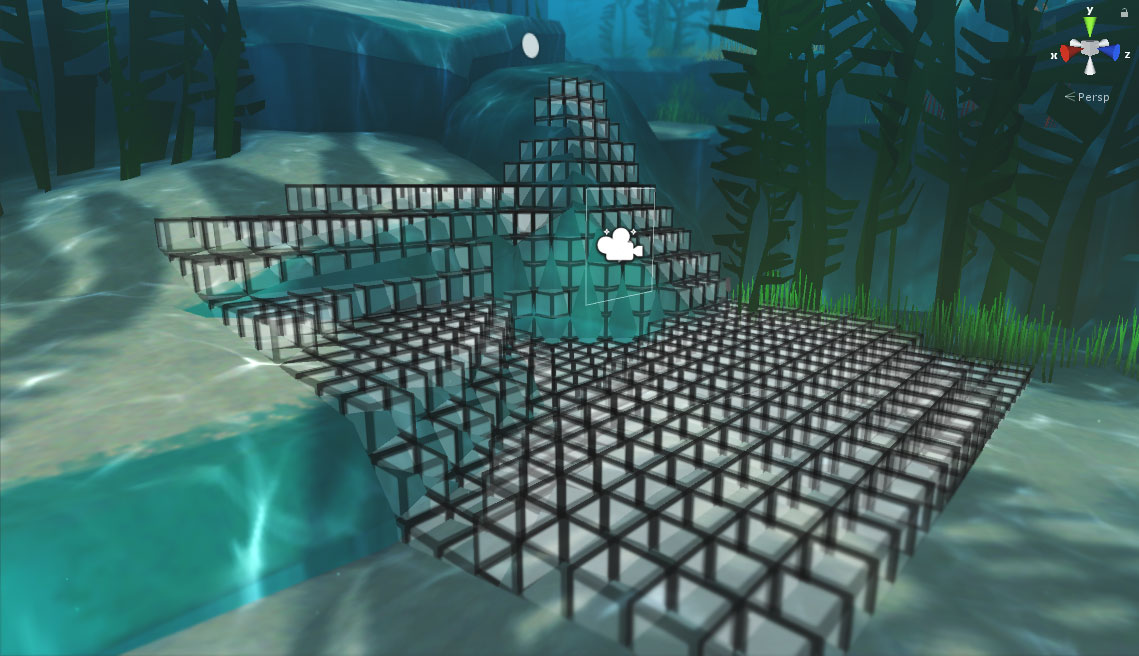

We opened up PRELOADED’s ‘Visual tricks and optimisation for mobile’ playbook, making sure we had ticked off all the usual suspects: reducing the poly count on kelp models; implementing shaders to simulate sea currents flowing through the branches; GPU instancing. They all helped, but we needed more juice. A few months later, we had developed a custom mesh batching system. Its settings could be changed at runtime, allowing us to cater for lower-end Daydream devices.
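To give a flavour of what mesh batching involves, here is a minimal sketch in Python. It is an illustration of the general technique, not the system we shipped: the `Mesh`, `BatchSettings` and `build_batches` names are hypothetical, and the single `max_vertices_per_batch` knob is only an assumed example of a setting that could be tuned per device at runtime. The idea is to bake each branch's position into its vertices and append everything into a few large meshes, shifting the triangle indices as we go.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class Mesh:
    vertices: list[Vec3]
    triangles: list[int]  # indices into `vertices`, three per triangle


@dataclass
class BatchSettings:
    # Hypothetical runtime knob: smaller batches for weaker devices.
    # 65535 is the most vertices a 16-bit index buffer can address.
    max_vertices_per_batch: int = 65535


def build_batches(instances: list[tuple[Mesh, Vec3]],
                  settings: BatchSettings) -> list[Mesh]:
    """Merge (mesh, world position) pairs into as few combined meshes as fit."""
    batches: list[Mesh] = []
    current = Mesh([], [])
    for mesh, (px, py, pz) in instances:
        if len(mesh.vertices) > settings.max_vertices_per_batch:
            raise ValueError("a single mesh exceeds the batch vertex limit")
        # Start a new batch when this mesh would overflow the current one.
        if len(current.vertices) + len(mesh.vertices) > settings.max_vertices_per_batch:
            batches.append(current)
            current = Mesh([], [])
        base = len(current.vertices)  # index offset for this mesh's triangles
        # Bake the instance position into the vertices.
        current.vertices.extend((x + px, y + py, z + pz) for x, y, z in mesh.vertices)
        current.triangles.extend(base + i for i in mesh.triangles)
    if current.vertices:
        batches.append(current)
    return batches


# Usage: 600 quad "branches" merged under a low-end vertex budget.
quad = Mesh(
    vertices=[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)],
    triangles=[0, 1, 2, 0, 2, 3],
)
instances = [(quad, (float(i), 0.0, 0.0)) for i in range(600)]
low_end = BatchSettings(max_vertices_per_batch=1000)
batches = build_batches(instances, low_end)
print(len(batches))  # 3 combined meshes instead of 600 separate ones
```

Raising or lowering the vertex budget trades the number of combined meshes against the size of each one, which is the kind of dial you might want to turn differently on each device.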

Because of our continued optimisation, we had enough grunt on 6DOF headsets to swap out static meshes for cloth physics, allowing players to push their heads through the kelp.

To pull everything together and provide a visual grade, we developed a custom fogging system that allowed you to ramp through colour not only in depth, but also in height. This helped reinforce story beats through the experience as you journeyed deeper into the ocean.
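As a rough illustration of fog that ramps in both depth and height, here is a small Python sketch. It is not our shader: `FogSettings`, `apply_fog`, the colour values and the linear ramps are all assumptions made for the example. View distance decides how much fog is applied and shifts it from a near colour to a far colour, while world height pulls the fog colour towards a darker seabed tone.

```python
from dataclasses import dataclass

Colour = tuple[float, float, float]  # RGB, each channel in 0..1


def clamp01(t: float) -> float:
    return max(0.0, min(1.0, t))


def mix(a: Colour, b: Colour, t: float) -> Colour:
    """Linear blend from a (t = 0) to b (t = 1)."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))


@dataclass
class FogSettings:
    # All values are illustrative assumptions, not taken from the game.
    near: float = 2.0             # distance at which fog starts
    far: float = 40.0             # distance at which fog is fully opaque
    near_colour: Colour = (0.10, 0.45, 0.50)
    far_colour: Colour = (0.02, 0.10, 0.20)
    surface_height: float = 0.0   # world height of the water surface
    floor_height: float = -30.0   # world height of the seabed
    floor_colour: Colour = (0.00, 0.02, 0.08)


def apply_fog(surface: Colour, distance: float, height: float,
              s: FogSettings) -> Colour:
    # 0 at the near plane, 1 at the far plane.
    depth_t = clamp01((distance - s.near) / (s.far - s.near))
    # 0 at the water surface, 1 at the seabed.
    height_t = clamp01((s.surface_height - height) / (s.surface_height - s.floor_height))
    # Ramp the fog colour by distance, then darken it with depth below the surface.
    depth_colour = mix(s.near_colour, s.far_colour, depth_t)
    fog = mix(depth_colour, s.floor_colour, height_t)
    # Distance alone decides how much fog covers the surface colour.
    return mix(surface, fog, depth_t)


settings = FogSettings()
kelp_green = (0.2, 0.6, 0.3)
close_up = apply_fog(kelp_green, distance=1.0, height=-5.0, s=settings)
far_shallow = apply_fog(kelp_green, distance=60.0, height=0.0, s=settings)
far_deep = apply_fog(kelp_green, distance=60.0, height=-30.0, s=settings)
```

Swapping the colour values as the player descends is one way a ramp like this could follow the story beats.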

Digging deep into our arsenal of visual tricks, we finally rolled out a custom light baker that let us quickly zone areas of scenery within the Unity editor and export them to Maya to render high-quality bakes.